TL;DR: Memento mori. After reading too much Seneca, I’m meditating on death like a statistician, by counting how many of GRRM’s readers did not even survive to see the HBO show (much less the end of the book series). Rough answer: around 40,000.

No disrespect is meant to Martin, his readers, or their families. It's just a thought exercise that intrigued me, and I figured it might interest other people.

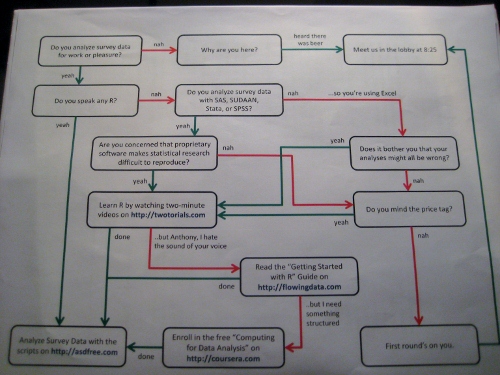

Also, we’ve blogged about GoT and statistics before.

In the Spring a young man’s fancy lightly turns to actuarial tables.

That’s right: Spring is the time of year when the next bloody season of Game of Thrones airs. This means the internet is awash with death counts from the show and survival predictions for the characters still alive.

Others, more pessimistically, wonder about the health of George R. R. Martin, author of the A Song of Ice and Fire (ASOIAF) book series on which Game of Thrones is based. Some worried readers compare Martin to Robert Jordan, who passed away after writing the 11th Wheel of Time book, leaving 3 more books to be finished posthumously. Martin's planned trilogy has become 5 books so far and is supposed to end at 7, unless it's 8… so who really knows how long it'll take.

(Understandably, Martin responds emphatically to these concerns. And after all, Martin and Jordan are completely different aging white American men who love beards and hats and are known for writing phone-book-sized fantasy novels that started out as intended trilogies but got out of hand. So, basically no similarities whatsoever.)

But besides the author and his characters, there’s another set of deaths to consider. The books will get finished eventually. But how many readers will have passed away waiting for that ending? Let’s take a look.

Caveat: the inputs are uncertain, the process is handwavy, and the outputs are certainly wrong. This is all purely for fun (depressing as it may be).