While we’re on the subject of statistics-related classroom activities with a “wow factor,” let me bring up my favorite: guessing whether a sequence of coin flips is real or fake.

BS detector

For me, it really brought home the idea that math is an amazing BS detector. Sure, we tell kids to learn math so they can balance their checkbooks, figure out the tip at a restaurant, blah blah blah. But consider these very reasonable counterarguments: (1) Yawn, and (2) Calculators/computers do all that for us anyway.

So you have to fire back: you wanna get screwed over? When you sign up for student loans at a terrible rate because the loan officer was friendly and you couldn’t even guesstimate the math in your head, you’ll be stuck with awful payments for the next 10 years. When your phone company advertises “.002 cents per kilobyte” but charges you .002 dollars per kilobyte instead, a hundred times as much, you should call them out on it.

You may never have the luck to acquire a superhero spider sense, but we mortals can certainly hone our number sense. People will try to con you over the years, but if you keep this tool called “math” in your utility belt, I guarantee it’ll save your butt a few times down the line.

Coin trick

Anyway, the coin flip thing itself may be more of a cute demo than directly practical, but it’s really, really cute. Watch:

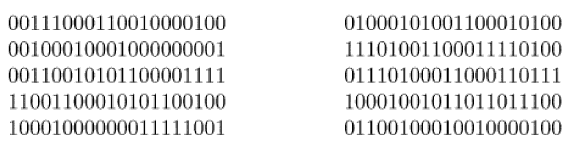

You split the class into two groups. One is going to flip a coin 100 times in a row and write down the resulting sequence of heads and tails. The other is going to pretend they did this and write down a made-up “random” sequence of heads and tails. The teacher leaves the room until both groups are done, then comes back in and has to guess which sequence came from real coin flips and which is the fake. And BAM, like magic, no calculation required, the teacher’s finely honed number sense makes it clear which is which.

Can you tell from the pair below?

(example copied from Gelman and Nolan, 2002, Teaching Statistics)

Enterprising statisticians have noticed that, in a sequence of 100 truly random coin flips, there’s a better-than-even chance (roughly 55%) of at least one “long” streak of six or more heads in a row (and the same for tails), and about an 80% chance of a streak that long of one face or the other. Meanwhile, people faking the data usually think that long streaks don’t look “random” enough. So the fake sequence will usually switch between heads and tails after only 2 or 3 of each, while the real sequence will have a few long streaks of 5 or 6 or more heads (or tails) in a row.
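If you’d rather check the streak claim than take it on faith, here’s a minimal Python sketch that computes the exact probabilities. The function names are my own, and it assumes a fair coin with independent flips. It walks through the flips one at a time, keeping track of how likely each “current run length” is among sequences that haven’t produced a long streak yet.

```python
def prob_heads_streak(n_flips: int, min_len: int) -> float:
    """Exact chance that n_flips fair flips contain >= min_len heads in a row."""
    # no_streak[r]: probability that no long streak has happened so far
    # and the sequence currently ends in exactly r heads.
    no_streak = [1.0] + [0.0] * (min_len - 1)
    for _ in range(n_flips):
        nxt = [0.0] * min_len
        nxt[0] = 0.5 * sum(no_streak)          # tails resets the run to 0
        for r in range(min_len - 1):
            nxt[r + 1] += 0.5 * no_streak[r]   # heads extends the run by 1
        # A run of min_len - 1 followed by heads completes a streak,
        # so that probability is deliberately dropped from the table.
        no_streak = nxt
    return 1.0 - sum(no_streak)


def prob_either_streak(n_flips: int, min_len: int) -> float:
    """Exact chance of >= min_len identical faces in a row (heads or tails)."""
    if n_flips == 0:
        return 0.0
    if min_len <= 1:
        return 1.0
    # After the first flip the current run has length 1, whichever face it is.
    no_streak = [0.0] * min_len
    no_streak[1] = 1.0
    for _ in range(n_flips - 1):
        nxt = [0.0] * min_len
        nxt[1] = 0.5 * sum(no_streak)          # different face: new run of 1
        for r in range(1, min_len - 1):
            nxt[r + 1] += 0.5 * no_streak[r]   # same face: run grows by 1
        no_streak = nxt
    return 1.0 - sum(no_streak)
```

Calling `prob_heads_streak(100, 6)` and `prob_either_streak(100, 6)` gives the numbers for the classroom setup, and changing the arguments lets you see how quickly long streaks become near-certain as the sequence grows.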

So is your number sense tingling yet? In the example above, the sequence on the left is real while the right-hand data was faked.
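The teacher’s trick can be sketched in a few lines of Python. This is a rough heuristic, not a formal test: it just compares the longest streak in each sequence and guesses that the one with the longer streak is the real one. The names `longest_streak` and `guess_real` are made up for illustration, and the sequences are assumed to be strings of `H` and `T`.

```python
from itertools import groupby


def longest_streak(flips: str) -> int:
    """Length of the longest run of identical symbols, e.g. 'HHTHHHT' -> 3."""
    return max((len(list(run)) for _, run in groupby(flips)), default=0)


def guess_real(seq_a: str, seq_b: str) -> str:
    """Guess which sequence came from a real coin: 'a', 'b', or 'unsure'."""
    a, b = longest_streak(seq_a), longest_streak(seq_b)
    if a == b:
        return "unsure"  # the heuristic has nothing to go on
    return "a" if a > b else "b"
```

For example, `guess_real("HHHHHHTTHT", "HTHTHHTHTT")` picks the first sequence, since its longest streak is 6 versus 2 for the second. A real classroom pair will be less clear-cut, which is why the function admits defeat on a tie.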

(I’m not sure where this demo originates. I first heard of it in Natalie Angier’s 2007 book The Canon, but it’s also described in Gelman and Nolan’s 2002 book Teaching Statistics mentioned above, and in Ted Hill’s 1999 Chance magazine article “The Difficulty of Faking Data”. Hill’s article is worth a read and goes into more detail on another useful statistical BS detector, Benford’s Law, which can help flag fraudulent tax data!)

So what?

Lesson learned: to the untrained eye, randomness may look non-random, and vice versa. Sure, this is a toy example, but let’s generalize a bit. First, here we have random data generated in one dimension, time. This shows that long winning or losing streaks can happen by pure chance, far more often than most people expect. Say the sports team you manage has been on a winning (or losing) streak: does that mean the new star player is a real catch (or dud)? Maybe not. It might be a coincidence, unless the streak keeps running much longer than you’d expect due to chance, and statisticians can help you calibrate your sense of just how long a streak to expect.

Or imagine random data generated in two dimensions, spatial data, like mapping disease incidence on a grid of city blocks. Whereas before we had winning/losing streaks over time, now we’ll have clusters in space. We don’t know where they’ll be but we are sure there’s going to be some clustering somewhere. So if neighborhood A seems to have a higher cancer rate than neighborhood B, is there a local environmental factor in ‘hood A that might be causing it? Or is it just a fluke, to be expected, since some part of town will have the highest rates even if everyone is equally at risk? This is a seriously hard problem and can make a big difference in the way you tackle public health issues. If we cordon off area A, will we be saving lives or just wasting time and effort? Statisticians can tell, better than the untrained eye, whether the cluster is too intense to be a fluke.
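To get a feel for how much clustering pure chance produces, here’s a small simulation sketch in Python. It makes strong simplifying assumptions that are mine, not part of any real analysis: every block has the same population, every person has the same risk, and cases are independent. The function names and parameters are hypothetical. It scatters cases over blocks at random many times and asks how often the busiest block is at least as busy as the one you observed.

```python
import random
from collections import Counter


def max_block_count(n_cases: int, n_blocks: int, rng: random.Random) -> int:
    """Drop n_cases onto n_blocks uniformly at random; return the largest count."""
    counts = Counter(rng.randrange(n_blocks) for _ in range(n_cases))
    return max(counts.values(), default=0)


def fluke_probability(observed_max: int, n_cases: int, n_blocks: int,
                      n_sims: int = 2000, seed: int = 0) -> float:
    """Share of equal-risk simulations whose busiest block has at least
    observed_max cases. A large value means the cluster could easily be chance."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    hits = sum(
        max_block_count(n_cases, n_blocks, rng) >= observed_max
        for _ in range(n_sims)
    )
    return hits / n_sims
```

With, say, 200 cases spread over 100 blocks, the average block has 2 cases, yet `fluke_probability(5, 200, 100)` should come out close to 1: some block almost always has 5 or more just by luck. Real spatial statistics has to handle unequal populations and neighboring blocks, so treat this as intuition only.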

It’s hard to make good decisions without knowing what’s a meaningful pattern and what’s just a coincidence. Statistics is a crazy powerful tool for figuring this out — almost magical, as the coin flip demo shows.