A few links to share since I’ve been away:

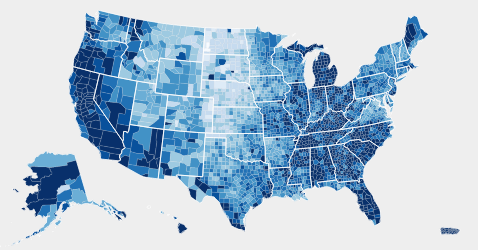

Article on “An American Tradition: The U.S. Census Bureau Continues to Innovate in Data Visualization” (free access after watching an ad): A colleague summarizes some of the many mapping and datavis resources provided by the Census Bureau.

Two interactive web apps by data users (these use Census Bureau data but the datavises are someone else’s products):

- Web app “Point Context”: a data user calls the Census Bureau’s API to find the distributions of age, race, income, and education for residents of the “average” neighborhood containing an arbitrary set of latitude-longitude coordinates.

- Interactive map of “Is the United States spending less on public education?”: A Census data user practices with D3 and tries out the lessons from datavis classes (show comparisons, offer color-blind-safe color palettes, use “catchy” headlines and informative annotations to guide readers, etc.). I particularly like the arrow indicating where the selected state falls on the colorbar.
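
To make the “average neighborhood” idea behind Point Context a bit more concrete, here is a minimal Python sketch. I don’t know how the app actually combines neighborhoods, so this assumes the simplest option: convert each neighborhood’s counts to shares, then average the shares with equal weight per neighborhood. The function name, the category labels, and the counts are all made up for illustration, and the step of fetching real counts from the Census Bureau’s API is left out.

```python
from collections import defaultdict


def average_distribution(neighborhoods):
    """Average category shares across neighborhoods, weighting each equally.

    `neighborhoods` is a list of dicts mapping a category label to a count.
    This is an illustrative assumption, not the app's documented method.
    """
    totals = defaultdict(float)
    used = 0
    for counts in neighborhoods:
        population = sum(counts.values())
        if population == 0:
            continue  # skip neighborhoods with no residents
        used += 1
        for category, count in counts.items():
            totals[category] += count / population
    if used == 0:
        return {}
    return {category: share / used for category, share in totals.items()}


# Hypothetical age counts for the neighborhoods containing two points.
example_neighborhoods = [
    {"under_18": 20, "18_to_64": 60, "65_plus": 20},
    {"under_18": 40, "18_to_64": 40, "65_plus": 20},
]
average_shares = average_distribution(example_neighborhoods)
```

Weighting each neighborhood equally treats every coordinate as equally important; weighting by population instead would describe the “average resident” rather than the “average neighborhood.”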

Several tools for making maps from Excel or spreadsheet-like tools:

- Esri, the makers of ArcGIS software, have created a Microsoft Office add-on that lets you create maps of your data in Excel. A live demo looked promising, especially if your organization is already an Esri client… but otherwise ArcGIS is not cheap!

- If you do have ArcGIS Online access, you can try using Esri’s “Story Maps” templates. Their published examples include this simple one based on Census Bureau data.

- JMP, a SAS product, also has mapping tools that should be fairly simple for people used to spreadsheets. But again, SAS’s products tend to be expensive.