This is the final summary of last week’s symposium on statistics and data visualization (see part 1 and part 2). Below I summarize Chris Volinsky’s talk on city planning with mobile data, and the final panel discussion between the speakers plus additional guests.

“A great way to do visualization is to print it out and stick it on the wall; physical manifestations beat small screens.”

You may know Chris Volinsky, of AT&T Labs, as part of the team that won the Netflix prize. The present talk was on a rather different topic: “shaping cities of the future using mobile data.” His research collaborators on this project included Simon Urbanek, whose R-in-the-cloud work I saw at useR! this year; as Volinsky put it, Urbanek is the team’s “data visualization mastermind” — what a great job title 🙂

Volinsky tied this work to the recent trend of using open data for city planning: see websites like Crimes in Chicago, Plow Tracker, and Street Bump, as well as events like DataGotham. These sites often use public records or crowdsourced data, but since Volinsky works at AT&T, his team has been exploring what’s possible with mobile phone data. He reassured us about the lengths they go to when anonymizing the data: removing any content besides time and location (and even the location is only the nearest tower, to within 1 sq mile rather than GPS precision), and going through IRB review before beginning any new research project.

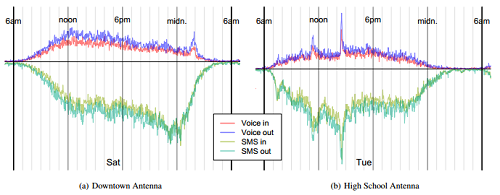

For starters, the team looked at profiles of voice usage and text usage at each cell phone tower, by time of day and day of week. With the voice time series plotted above and the texting time series below, both peaking mid-day, the plots look like a pair of lips, hence the name “lip plots” (see example above).
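To make the lip-plot idea concrete, here is a minimal Python sketch of the aggregation step that could sit behind such plots. The record format (tower ID, day of week, hour, usage kind) and the names `lip_profiles` and `peak_slot` are hypothetical, chosen for illustration; the talk did not describe AT&T’s actual pipeline. Each tower gets one 168-slot weekly series for voice and one for text, which a plotting library could then draw with voice above and text below.

```python
"""Sketch: per-tower weekly voice/text profiles for lip plots (hypothetical records)."""
from collections import defaultdict

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]
SLOTS = 7 * 24  # one slot per hour of the week


def lip_profiles(records):
    """Count voice and text events per tower by (day of week, hour of day).

    `records` yields (tower_id, day, hour, kind) tuples with day in 0-6
    (Monday = 0), hour in 0-23, and kind either "voice" or "text".
    Returns {tower_id: {"voice": counts, "text": counts}}, where each
    counts list has 168 entries indexed by day * 24 + hour.
    """
    profiles = defaultdict(lambda: {"voice": [0] * SLOTS, "text": [0] * SLOTS})
    for tower, day, hour, kind in records:
        if kind not in ("voice", "text"):
            raise ValueError(f"unknown usage kind: {kind!r}")
        if not (0 <= day < 7 and 0 <= hour < 24):
            raise ValueError(f"bad day/hour: {day}, {hour}")
        profiles[tower][kind][day * 24 + hour] += 1
    return dict(profiles)


def peak_slot(counts):
    """Return (day name, hour) of the busiest slot in a 168-slot weekly profile."""
    idx = max(range(len(counts)), key=counts.__getitem__)
    return DAYS[idx // 24], idx % 24
```

Note that a call peak at 2am on "Saturday night" would show up here as `("Sun", 2)`, because the sketch files each event under the calendar day on which it occurs.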

The nifty thing is that a lip plot can make it quite clear where the cell phone tower must be. The plot above on the left, showing Saturday night with a call peak around 2am, must be in a commercial area where the bars close at 2am. The plot on the right, with more texting than calls overall and a few regular spikes at 11am and 3pm, must be near a high school: kids text continuously but even more so during breaks, and then have to call their text-unsavvy parents once school lets out. Volinsky’s group printed out a huge poster of these lip plots for the towers near their workplace around Morristown, NJ, and found it much easier to talk over a poster than a small screen. As they discussed patterns with the city planners, they realized this data could provide evidence in favor of spending money on late-night shuttles leaving the downtown bar scene, at least if they knew where most of the callers were going home to.

The next project involved attempting to track drivers’ trajectories: who drives downtown, do they take the same route each day, and in general can we encourage them to take other routes? However, remember that they don’t have GPS data, only the pattern of cell phone tower handoffs, and that pattern is extremely variable. In areas with many towers, you can drive the same route several times and get a different handoff pattern each time. The team drove the major routes into and out of Morristown repeatedly to collect data on handoff patterns and build a classification algorithm.

The classification step required a distance metric so they could decide which route best matched a given temporal/tower-order pattern. Volinsky introduced us to the (new to me) Earth Mover’s Distance (EMD). Imagine you have a dump truck and two piles of dirt: to make one pile look like the other, what’s the least possible cost (summed over all the dirt, amount moved × distance moved)? Or in Volinsky’s case, if you have a pile of cell-phone minutes and the pattern of their cell-tower handoffs, which Morristown route minimizes the minutes you need to move, and the time-distance you need to move them, in order to match the typical handoff pattern for that route?
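In one dimension, with histograms over the same equally spaced, ordered bins, the Earth Mover’s Distance between two normalized histograms reduces to the area between their cumulative distributions. The sketch below uses that shortcut. Summarizing a trip as a histogram of phone-minutes over ordered bins (time slots, or towers in a fixed order along a corridor) and the route templates are assumptions for illustration, not necessarily how Volinsky’s team set up the problem; `emd_1d` and `classify_route` are hypothetical names.

```python
"""Sketch: 1-D Earth Mover's Distance and nearest-template route matching."""
from itertools import accumulate


def emd_1d(p, q, bin_width=1.0):
    """EMD between two histograms over the same ordered, equally spaced bins.

    Both histograms are normalized to total mass 1 first. In 1-D the minimal
    transport cost equals sum_i |P_i - Q_i| * bin_width, where P and Q are
    the cumulative sums of the normalized histograms.
    """
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    sp, sq = sum(p), sum(q)
    if sp <= 0 or sq <= 0:
        raise ValueError("histograms must have positive total mass")
    cdf_p = accumulate(x / sp for x in p)
    cdf_q = accumulate(x / sq for x in q)
    return bin_width * sum(abs(a - b) for a, b in zip(cdf_p, cdf_q))


def classify_route(observed, templates):
    """Return the name of the route template closest to `observed` under EMD."""
    return min(templates, key=lambda name: emd_1d(observed, templates[name]))


# Hypothetical templates: typical share of phone-minutes per ordered bin.
templates = {"route_A": [5, 1, 0, 0], "route_B": [0, 0, 1, 5]}
best = classify_route([4, 2, 0, 0], templates)
```

For example, `emd_1d([1, 0, 0], [0, 0, 1])` is 2.0: one unit of mass moved two bins. A general EMD between arbitrary point sets needs a transportation (linear programming) solver; the cumulative-sum trick only applies to 1-D ordered bins.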

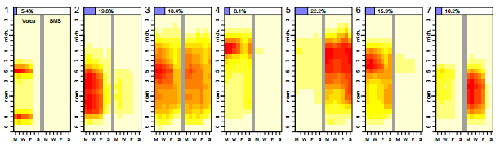

This EMD metric is useful for many other problems. Another example came when Volinsky’s team tried to cluster cell phones into groups with similar usage patterns across time of day, day of week, and voice vs. text usage; EMD turned out to work for this too. See these heatmaps of the chosen clusters, and how they make sense: people who never text and only call right before or after work, never on evenings or weekends; people who call throughout the work day; people who call and text all day; etc.
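Clustering with EMD calls for an algorithm that needs only pairwise distances; k-medoids is one natural choice, though the talk did not say which clustering method the team used. This sketch assumes each phone is summarized as a histogram of activity over ordered time-of-day bins and reuses the 1-D cumulative-sum form of EMD. It ignores day of week, voice vs. text, and the wraparound at midnight, all of which a real analysis would need to handle; the toy profiles are made up.

```python
"""Sketch: cluster usage profiles with k-medoids under a 1-D EMD (toy data)."""
from itertools import accumulate


def emd_1d(p, q):
    """1-D EMD with unit bin width: area between normalized cumulative histograms."""
    sp, sq = sum(p), sum(q)
    cdf_p = accumulate(x / sp for x in p)
    cdf_q = accumulate(x / sq for x in q)
    return sum(abs(a - b) for a, b in zip(cdf_p, cdf_q))


def assign(dist, medoids):
    """Label each item with the index of its nearest medoid."""
    return [min(range(len(medoids)), key=lambda c: dist[i][medoids[c]])
            for i in range(len(dist))]


def k_medoids(profiles, k, max_iter=100):
    """Alternating k-medoids on a precomputed EMD matrix.

    Initializes medoids to the first k profiles (a naive choice; real use
    would try several starts). Returns (medoid indices, cluster labels).
    """
    n = len(profiles)
    dist = [[emd_1d(profiles[i], profiles[j]) for j in range(n)] for i in range(n)]
    medoids = list(range(k))
    for _ in range(max_iter):
        labels = assign(dist, medoids)
        new_medoids = []
        for c in range(k):
            members = [i for i in range(n) if labels[i] == c]
            if not members:  # keep the old medoid for an empty cluster
                new_medoids.append(medoids[c])
                continue
            # New medoid: the member with the smallest total distance to the others.
            new_medoids.append(min(members, key=lambda m: sum(dist[m][j] for j in members)))
        if new_medoids == medoids:
            break
        medoids = new_medoids
    return medoids, assign(dist, medoids)


# Toy profiles over four time-of-day bins (e.g. morning, midday, evening, night).
profiles = [[9, 1, 0, 0], [8, 2, 0, 0], [0, 0, 2, 8],
            [0, 0, 1, 9], [10, 0, 0, 0], [0, 0, 0, 10]]
medoids, labels = k_medoids(profiles, k=2)
```

On this toy data the three morning-heavy profiles and the three night-heavy profiles end up in separate clusters, even though both starting medoids were morning-heavy.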

With all these intriguing results, and the suggestion that AT&T may sell a service for such data and analyses to city planners, the audience wondered how much we should worry that AT&T’s mobile users aren’t a random sample. Volinsky said they’ve studied what the biases are, and frankly the bigger concern should be the distinction between smartphone and regular-cell-phone users. There was also some discussion of developing apps where people could opt in to let their provider track GPS data (and use it for more detailed research) in exchange for something worthwhile, such as useful information or lower phone bills. However, the participants in this kind of opt-in would be even more biased… I gather that many of us statisticians are desperate for better guidance (whether theory or even just rules of thumb) on how to work with non-random samples.

“You guys talk — I’ll go ahead and do it.”

Actually, during the panel discussion it turned out that statisticians have many concerns about how to do our jobs better. (I guess that’s statistical thinking in the spirit of Rob Kass’ definition: we’re still trying to evaluate and improve our tools/approach, even at the meta level.) Some of my favorite points:

- Our traditional static graphics no longer cut it for exploratory data analysis, even in R: we need to do more with interactivity, using JavaScript and suchlike. And instead of focusing on the mean effect or the optimal parameter, use sliders to show the effect of different parameters; why worry about pre-computing the optimal bin width for a histogram instead of trying several bin widths interactively?

- “Big data is whatever can’t be read into R easily” 😛 You can get started by sampling the data and using traditional approaches, but eventually you’ll hit the “tension between scaling down your data and scaling up your models.” Carl Morris suggested breaking the data not into random samples but into homogeneous subsets, then scaling up: start selectively on subgroups where different simple models work, then fit the models together hierarchically and tell a story about how they connect.

- Statisticians should treat data integration and preprocessing as a bigger concern, since they can affect the results and reliability of our analyses

- Our role as statistical modelers should be to make scientific problems simpler for others, while giving a sense of the variability and uncertainty due to our simplifications

- In “big data” projects, consider the 4 V’s: volume of data, velocity at which it accrues, variety of the population it covers, and veracity of the source

- Statisticians visualize data but tend to perform inference about models one by one, whereas an audience member (a student of Ben Shneiderman) said that the information visualization community focuses on inference from the visualizations themselves, exploring the data interactively rather than going back to the code to rerun a new model. Di Cook suggested the infovis folks could pay more attention to how randomness in the data might look (as in her earlier talk), not just how to visualize data in new ways; and statisticians could learn from them a lot about presenting data in more accessible and elegant ways.

- Too many statisticians can only do what’s easy in R; we need to learn more diverse coding skills and hold our own. Computer science folks find it easier to just go ahead and compute whatever can be crunched, often with great precision, but we’re needed too, since we care about what’s meaningful to estimate. CS folks might not pay as much attention as we do to whether the output will be useful signal or random noise given the uncertainty of the inputs and the model. We’re the “gurus of variability.” CS folks have had massive successes, such as Google searches that use very little statistical thinking and lots of CS magic; but in a Google search it’s okay to get some unwanted results, since the cost of trying a few results is low. It’s quite different when studying biomarkers and health data: patients can’t just try a few expensive, risky procedures and hope one works.

- Jim Berger suggested a proper research team would ideally include a statistician, and a probabilist, and a mathematician, and a computer scientist, besides all the subject matter experts… but in practice the team’s only funded to hire one “quant” and they get whomever they can, perhaps not even knowing the difference between our specialties. Statisticians are sometimes marginalized by the hiring of coders and designers, who can show off their skills more easily to someone who doesn’t realize they really need a statistician instead. And of course teams are not always the answer: there’s so much more you can do if you do learn many diverse skills yourself — as Mark Hansen showed us.

- Sometimes it’s most important just to do something rather than spend time optimizing the math or tweaking the code. Martin McIntosh told the story of a couple of statisticians who spent weeks trying to find the best model for locating a certain spot in the brain from image data. A new intern solved the issue in a day by putting all the images in a PowerPoint deck and scanning through them quickly, marking the approximate spot in each; the collection of eyeballed spots turned out to be good enough for the experimenters to move ahead with their research. So although we may be the “lawyers of the quantitative sciences” (in Chris Volinsky’s words), and our rigor about assumptions may usually be right, there are times when it’s worth just trying a few approaches and seeing what works best.
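Carl Morris’s suggestion of fitting simple models on subgroups and then combining them hierarchically can be illustrated, under strong simplifying assumptions, with normal-normal partial pooling: each subgroup’s estimate is shrunk toward the overall mean, and more strongly when it is noisy relative to the spread between subgroups. The model below (known sampling variances, a crude method-of-moments estimate of the between-group variance) and the name `partial_pool` are illustrative choices, not a method the panel prescribed.

```python
"""Sketch: combine per-subgroup estimates with normal-normal partial pooling."""
from statistics import fmean, pvariance


def partial_pool(means, variances):
    """Shrink subgroup estimates toward their overall mean.

    Assumes y_j ~ Normal(theta_j, v_j) with known sampling variances v_j and
    theta_j ~ Normal(mu, tau^2). mu is the plain average of the y_j and tau^2
    is estimated by the method of moments, floored at zero.
    Returns (mu, tau2, pooled_estimates).
    """
    if len(means) != len(variances) or not means:
        raise ValueError("need one variance per mean, and at least one group")
    mu = fmean(means)
    tau2 = max(0.0, pvariance(means) - fmean(variances))
    pooled = []
    for y, v in zip(means, variances):
        # Shrinkage factor B_j = v_j / (v_j + tau^2); a zero-variance estimate is kept as is.
        b = v / (v + tau2) if v > 0 else 0.0
        pooled.append(mu + (1.0 - b) * (y - mu))
    return mu, tau2, pooled
```

For example, `partial_pool([1.0, 2.0, 3.0], [0.5, 0.5, 0.5])` estimates τ² as about 1/6, so each subgroup keeps a quarter of its deviation from the mean (shrinkage factor 0.75), giving approximately `[1.75, 2.0, 2.25]`. A full hierarchical model would also propagate the uncertainty in μ and τ².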

So there you have it. We are the gurus of uncertainty, the hub or the glue keeping science together, the lawyers and cops keeping it honest, the managers ensuring quality at all steps of the process… yet we’re expected to move quickly and keep it as simple as possible (while tracking the effects of our simplifications). In order to do my job well, I need to know not only the traditional statistical theory and rules of thumb, but also a lot about programming (in many languages: R, SAS, C, JavaScript, Python); numerical optimization; data management and databases; visual design (incl. specialized aspects such as cartography and color perception)… and that’s not even including subject-matter expertise about whatever it is I’m actually analyzing. It may be a little overwhelming, but these are exciting times to be a statistician.

Thanks again to all the organizers, speakers, and panelists for a thoroughly fascinating day!