Hats off to my classmate Alex Reinhart for publishing his first book! Statistics Done Wrong: The Woefully Complete Guide [website, publisher, Amazon] came out this month. It’s a well-written, funny, and useful guide to the most common problems in statistical practice today.

Although most of his examples are geared towards experimental science, most of the advice is just as valid for readers working in social science, data journalism [if Alberto Cairo likes your book it must be good!], surveys or polls, business analytics, or any other “data science” situation where you’re using a data sample to learn something about the broader world.

This is NOT a how-to book about plugging numbers into the formulas for t-tests and confidence intervals. Rather, the focus is on interpreting these seemingly-arcane statistical results correctly; and on designing your data collection process (experiment, survey, etc.) well in the first place, so that your data analysis will be as straightforward as possible. For example, he really brings home points like these:

- Before you even collect any data, if your planned sample size is too small, you simply can’t expect to learn anything from your study. “The power will be too low,” i.e. the estimates will be too imprecise to be useful.

- For each analysis you do, it’s important to understand commonly-misinterpreted statistical concepts such as p-values, confidence intervals, etc.; else you’re going to mislead yourself about what you can learn from the data.

- If you run a ton of analyses overall and only publish the ones that came out significant, such data-fishing will mostly produce effects that just happened (by chance, in your particular sample) to look bigger than they really are… so you’re fooling yourself and your readers if you don’t account for this problem, leading to bad science and possibly harmful conclusions.
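To make the first and third points concrete, here is a minimal simulation sketch. It is not from the book; the effect size, sample size, and the simplifying assumption of a known standard deviation of 1 (so a z-test applies) are all made up for illustration. It runs many small two-group studies of a modest true effect, keeps only the "significant" ones, and reports how often that happens and how big the surviving estimates look.

```python
import random
from statistics import NormalDist, mean


def simulate_significance_filter(true_effect=0.2, n_per_group=20,
                                 n_studies=5000, alpha=0.05, seed=0):
    """Simulate many small studies and keep only the significant ones.

    Assumes both groups are normal with known standard deviation 1,
    so a two-sided z-test on the difference in means is appropriate.
    Returns (estimated power, mean estimated effect among significant studies).
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = (2 / n_per_group) ** 0.5  # standard error of the difference in means
    significant = []
    for _ in range(n_studies):
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        treated = [rng.gauss(true_effect, 1) for _ in range(n_per_group)]
        diff = mean(treated) - mean(control)
        if abs(diff) / se > z_crit:
            significant.append(diff)
    power = len(significant) / n_studies
    return power, mean(significant)


power, mean_significant_effect = simulate_significance_filter()
print(f"share of studies reaching significance: {power:.2f}")
print(f"true effect: 0.20, mean 'significant' estimate: {mean_significant_effect:.2f}")
```

Under these assumptions only a small fraction of the studies reach significance, and the ones that do overestimate the true effect by a wide margin, because an estimate has to be far from zero to clear the threshold at this sample size.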

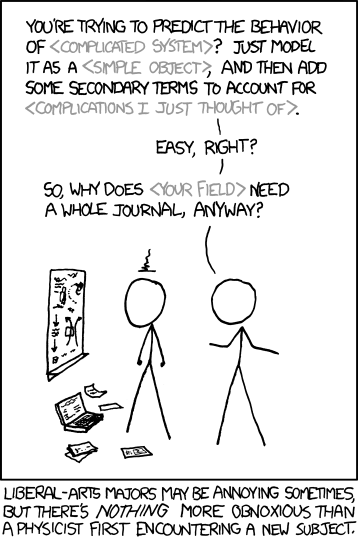

Admittedly, Alex’s physicist background shows in a few spots, when he implies that physicists do everything better 🙂 (e.g. see my notes below on p.49, p.93, and p.122.)

Seriously though, the advice is good. You can find the correct formulas in any Stats 101 textbook. But Alex’s book is a concise reminder of how to plan a study and to understand the numbers you’re running, full of humor and meaningful, lively case studies.

Highlights and notes-to-self below the break:

- p.7: “We will always observe some difference due to luck and random variation, so statisticians talk about statistically significant differences when the difference is larger than could easily be produced by luck.”

Larger? Well, sorta but not quite… Right on the first page of chapter one, Alex makes the same misleading implication that he’ll later spend pages and pages dispelling: “Statistically significant” isn’t really meant to imply that the difference is large, so much as it’s measured precisely. That sounds like a quibble but it’s a real problem. If our historical statistician-forebears had chosen “precise” or “convincing” (implying a focus on how well the measurement was done) instead of “significant” (which sounds like a statement about the size of what was measured), maybe we could avoid confusion and wouldn’t need books like Alex’s.

- p.8: “More likely, your medication actually works.” Another nitpick: “more plausibly” might be better, avoiding some of the statistical baggage around the word “likely,” unless you’re explicitly being Bayesian… OK, that’s enough nitpicking for now.

- p.9: “This is a counterintuitive feature of significance testing: if you want to prove that your drug works, you do so by showing that the data is inconsistent with the drug not working.” Very nice and clear summary of the confusing nature of hypothesis tests.

- p.10: “This is troubling: two experiments can collect identical data but result in different conclusions. Somehow, the p value can read your intentions.” This is an example of how frequentist inference can violate the likelihood principle. I’ve never seen why this should bother us: the p-value is meant to inform us about how well this experiment measures the parameter, not about what the parameter itself is… so it’s not surprising that a differently-designed experiment (even if it happened to get equivalent data) would give a different p-value.
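A standard textbook illustration of this point (not necessarily the example used in the book) takes 9 successes and 3 failures and tests whether the success probability exceeds 0.5. The sketch below assumes two hypothetical designs: one that fixed the number of trials at 12 in advance, and one that kept going until the 3rd failure. The data are identical, but the set of "more extreme" outcomes differs, so the one-sided p-values differ too.

```python
from math import comb


def p_value_fixed_n(successes, failures, p0=0.5):
    """One-sided p-value when the total number of trials was fixed in advance.

    P(at least `successes` successes in n = successes + failures trials).
    """
    n = successes + failures
    return sum(comb(n, k) * p0**k * (1 - p0)**(n - k)
               for k in range(successes, n + 1))


def p_value_stop_at_failures(successes, failures, p0=0.5):
    """One-sided p-value when sampling stopped at the `failures`-th failure.

    P(at least `successes` successes before that failure), which equals
    P(at most failures - 1 failures in the first successes + failures - 1 trials).
    """
    m = successes + failures - 1
    return sum(comb(m, j) * (1 - p0)**j * p0**(m - j)
               for j in range(failures))


print(f"fixed n = 12:        p = {p_value_fixed_n(9, 3):.4f}")           # 0.0730
print(f"stop at 3rd failure: p = {p_value_stop_at_failures(9, 3):.4f}")  # 0.0327
```

With a 0.05 threshold the first design is "not significant" and the second is "significant", even though the observed data are the same.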

- p.19: “In the prestigious journals Science and Nature, fewer than 3% of articles calculate statistical power before starting their study.” In the “Sampling, Survey, and Society” class here at CMU, we ask undergraduates to conduct a real survey on campus. Maybe next year we should suggest that they study how many of our professors do power calculations in advance.

- p.19: “An ethical review board should not approve a trial if it knows the trial is unable to detect the effect it is looking for.” A great point—all IRB members ought to read Statistics Done Wrong!

- p.20-21: A good section on why underpowered studies are so common. Researchers don’t realize their studies are too small; as long as they find something significant among the tons of comparisons they run, they feel the study was powerful enough; they do multiple-comparisons corrections (which is good!), but don’t account for the fact that they will do these corrections when computing power; and even with best intentions, power calculations are hard.
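The multiple-comparisons point is easy to check numerically. The sketch below is only a rough illustration under assumptions that are not from the book: a two-sided z-test comparing two group means with known, equal variance, a standardized effect size of 0.5, 64 subjects per group, and a Bonferroni correction for 10 comparisons.

```python
from math import sqrt
from statistics import NormalDist


def power_two_sample_z(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test.

    `effect_size` is the true difference in means divided by the common
    standard deviation (assumed known here, which is a simplification).
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = effect_size * sqrt(n_per_group / 2)  # expected z statistic
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


planned = power_two_sample_z(0.5, 64, alpha=0.05)
corrected = power_two_sample_z(0.5, 64, alpha=0.05 / 10)  # Bonferroni, 10 tests
print(f"power planned at alpha = 0.05:       {planned:.2f}")
print(f"power actually tested at alpha/10:   {corrected:.2f}")
```

In this setup a study planned for roughly 80% power ends up with roughly 50% power once the correction is applied, which is the gap described above.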

- p.21: A scary illustration of misuse and misunderstanding of significance testing. Several small studies of right-turns-on-red (RTOR) showed no significant difference in accident rates at intersections with & without RTOR… so it became widely legalized. But later, larger analyses have shown that there is a real, nontrivial increase in accident rates when RTOR is allowed; those earlier studies were simply underpowered. Remember that “not significant” does not mean we’ve proven there is no effect. It may only mean that our study was too small to measure the effect accurately! This example is from an article by Hauer, “The harm done by tests of significance.”

- p.23: Yes! Instead of computing power, we should do the equivalent to achieve a desired confidence interval width (sufficiently narrow). I hadn’t heard of this handy term “assurance, which determines how often the confidence interval must beat our target width.”

I’ve only seen this approach rarely, mostly with survey/poll sample size planning: if you want to say “Candidate A has X% of the vote (plus or minus 3%)” then you can calculate the appropriate sample size to ensure it really will be a 3% margin of error, not 5% or 10%.
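For the poll example, the usual back-of-the-envelope calculation looks like the sketch below. It assumes a simple random sample, the normal approximation to a proportion, and the worst case of a 50/50 split; real surveys with weighting or clustering need larger samples. Note that this targets the expected margin of error, not the stricter "assurance" idea of guaranteeing the width with high probability.

```python
from math import ceil
from statistics import NormalDist


def sample_size_for_margin(margin, confidence=0.95, p=0.5):
    """Smallest n so that z * sqrt(p * (1 - p) / n) <= margin.

    `p` is the assumed true proportion; 0.5 is the most conservative choice.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return ceil(z**2 * p * (1 - p) / margin**2)


for margin in (0.03, 0.05, 0.10):
    print(f"±{margin:.0%}: n = {sample_size_for_margin(margin)}")
# ±3%: n = 1068
# ±5%: n = 385
# ±10%: n = 97
```

Tightening the margin from 5% to 3% nearly triples the required sample, since n grows with the inverse square of the margin.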

I would much rather teach my students to design an experiment with high assurance than to compute power… assuming we can find or create good assurance-calculation software for them:

“Sample size selection methods based on assurance have been developed for many common statistical tests, though not for all; it is a new field, and statisticians have yet to fully explore it. (These methods go by the name accuracy in parameter estimation, or AIPE.)”

- p.49-50: “Particle physicists call this the look-elsewhere effect… they are searching for anomalies across a large swath of energies, any one of which could have produced a false positive. Physicists have developed complicated procedures to account for this and correctly limit the false positive rate.” Are these any different from what statisticians do? The cited reference may be worth a read: Gross and Vitells, “Trial factors for the look elsewhere effect in high energy physics.”

- p.52: “One review of 241 fMRI studies found that they used 207 unique combinations of statistical methods, data collection strategies, and multiple comparison corrections, giving researchers great flexibility to achieve statistically significant results.” Sounds like some of the issues I’ve seen when working with neuroscientists on underpowered and overcomplicated (tiny n, huge p) studies are widespread. Cited reference: Carp, “The secret lives of experiments: methods reporting in the fMRI literature.” See also the classic dead-salmon study (“The salmon was asked to determine what emotion the individual in the photo must have been experiencing…”)

- p.60: “And because standard error bars are about half as wide as the 95% confidence interval, many papers will report ‘standard error bars’ that actually span two standard errors above and below the mean, making a confidence interval instead.” Wow—I knew this is a confusing topic for many people, but I didn’t know this actually happens in practice so often. Never show any bars without clearly labeling what they are! Standard deviation, standard error, confidence interval (which level?) or what?
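To see how different the three kinds of bars are, here is a small sketch that computes all of them for one made-up sample. The data values are invented, and the confidence interval uses the normal quantile (about 1.96) as an approximation; for a sample this small a t quantile would give a somewhat wider interval.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def error_bar_half_widths(values, confidence=0.95):
    """Return the mean and three candidate error-bar half-widths.

    sd: sample standard deviation (spread of the data)
    se: standard error of the mean (sd / sqrt(n))
    ci: half-width of a normal-approximation confidence interval (z * se)
    """
    n = len(values)
    sd = stdev(values)
    se = sd / sqrt(n)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return {"mean": mean(values), "sd": sd, "se": se, "ci": z * se}


# Hypothetical measurements, for illustration only.
measurements = [4.1, 5.3, 4.8, 6.0, 5.1, 4.4, 5.7, 5.0, 4.9, 5.5]
bars = error_bar_half_widths(measurements)
for label in ("sd", "se", "ci"):
    print(f"mean ± {label}: {bars['mean']:.2f} ± {bars[label]:.2f}")
```

The same data produce three visibly different bars, which is why an unlabeled bar tells the reader very little.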

- p.61: “A survey of psychologists, neuroscientists, and medical researchers found that the majority judged significance by confidence interval overlap, with many scientists confusing standard errors, standard deviations, and confidence intervals.” Yeah, no kidding. Our nomenclature is terrible. Statisticians need to hire a spin doctor. Cited reference: Belia et al., “Researchers misunderstand confidence intervals and standard error bars.”

- p.62: “Other procedures [for comparing confidence intervals] handle more general cases, but only approximately and not in ways that can easily be plotted.” Let me humbly suggest a research report I co-wrote with my (former) colleagues at the Census Bureau, covering several ways to visually compare confidence intervals or otherwise make appropriate multiple comparisons visually: Wright, Klein, and Wieczorek, “Ranking Populations Based on Sample Survey Data.”

- p.90: Very helpful list of what to think about when preparing to design, implement, and analyze a study. There are many “researcher degrees of freedom” and more people are now arguing that at least some of these decisions should be made before seeing the data, to avoid excessive flexibility. I’ll use this list next time I teach experimental design: “What do I measure? Which variables do I adjust for? Which cases do I exclude? How do I define groups? What about missing data? How much data should I collect?”

- p.93-94: “particle physicists have begun performing blind analyses: the scientists analyzing the data avoid calculating the value of interest until after the analysis procedure is finalized.” This may be built into how the data are collected; “Other blinding techniques include adding a constant to all measurements, keeping this constant hidden from analysts until the analysis is finalized; having independent groups perform separate parts of the analysis and only later combining their results; or using simulations to inject false data that is later removed.” Examples in medicine are discussed too, such as drafting a “clinical trial protocol.”

- p.109: Nice—I didn’t know about Figshare and Dryad, which let you upload data and plots to encourage others to use and cite them. “To encourage sharing, submissions are given a digital object identifier (DOI), a unique ID commonly used to cite journal articles; this makes it easy to cite the original creators of the data when reusing it, giving them academic credit for their hard work.”

- p.122-123: Research results from physics education: “lectures do not suit how students learn,” so “How can we best teach our students to analyze data and make reasonable statistical inferences?” Use peer instruction and “force students to confront and correct their misconceptions… Forced to choose an answer and discuss why they believe it is true before the instructor reveals the correct answer, students immediately see when their misconceptions do not match reality, and instructors spot problems before they grow.”

I tried using such an approach when I taught last summer, and I found it very useful although a bit tricky since I couldn’t find a good bank of misconception-revealing questions for statistics that’s equivalent to the physicists’ Force Concept Inventory. But for next time I’ll check out the “Comprehensive Assessment of Outcomes in Statistics” that Alex mentions: delMas et al., “Assessing students’ conceptual understanding after a first course in statistics.”

I’ve also just heard about the LOCUS test (Levels of Conceptual Understanding in Statistics), which may also be worth a look.

- p.128: “A statistician should be a collaborator in your research, not a replacement for Microsoft Excel.” Truth.

I admit I don’t like how Alex suggests most statisticians will do work for you “in exchange for some chocolates or a beer.” I mean, yes, it’s true, but let’s not tell everybody that the going rate is so low! Surely my advice is worth at least a six-pack.