The final year! Our 2nd baby was on the way, and the job search was imminent. Oh, and by the way, there’s this little thing called “finishing your dissertation”…

Previous posts: the 1st, 2nd, 3rd, 4th, 5th, 6th, 7th, and 8th semesters of my Statistics PhD program.

Research

This year my advisor was able to put me on partial grant support, so I only had to TA half-time. I asked to have all my TA’ing pushed to the spring, with the intent of finishing the bulk of my thesis work this fall. I figured that in the spring I’d be OK grading 10 hrs/wk on my own time while helping with the new baby (due in Dec), as long as the thesis was mostly done.

However, job hunting took a HUGE amount of time. That’ll be its own post. (Also the spring TA duties turned into something larger, but that’s a story for the next post.)

In other words, thesis research progress was… minimal, to put it mildly.

Other projects

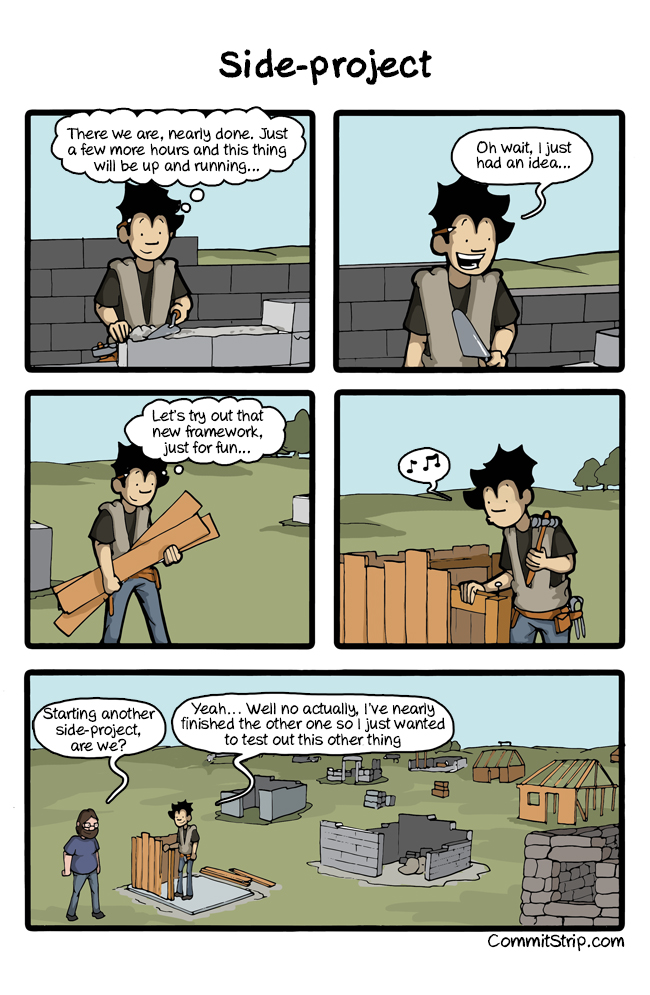

Well, OK, so there’s another reason my thesis work was slow: I jumped onto two new short-term projects that (1) were very much in my wheelhouse, and (2) seemed like great fodder for job applications and interviews. Both of them arose thanks to Ryan Tibshirani. Unsurprisingly, it turns out that it’s GREAT for a grad student to be on good terms with a well-known professor who gets too many requests for interesting projects and occasionally passes them on to students. In both cases, it was fantastic of Ryan to think of me, and although it’s been tough to fit these projects in right now, this is legitimately the kind of research I want to do later on (and probably should have done for my thesis in the first place! argh…).

First, knowing that I’m interested in education, Ryan asked if I’d like to help with some consulting for Duolingo, the language-learning app company (also founded by CMU folks and still Pittsburgh-based). The folks there had some interesting questions about experimental design and suitable metrics for A/B testing their app. One of our contacts there was Burr Settles, who wrote a helpful book on Active Learning (the ML technique, not the pedagogy). We had some good discussions after digging into the details of their problem: the challenges of running permutation tests on massive datasets, whether old-school tests like signed-rank tests would be better for their goals, how the data-over-time structure affects their analyses, etc. These chats even led me to an old Portland State classmate’s work on how to get confidence intervals from a permutation test, and I have some ideas for extensions on that particular problem.
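For readers who haven’t seen the idea, here is a minimal sketch of a permutation test for an A/B comparison, plus one textbook way to turn it into a confidence interval by inverting the test. To be clear, this is not Duolingo’s analysis and not necessarily my classmate’s method: the function names, the difference-in-means statistic, the additive-shift assumption, and the grid of candidate shifts are all my own illustrative choices.

```python
import random
from statistics import mean


def perm_test_pvalue(treatment, control, n_perm=2000, seed=0):
    """Two-sided Monte Carlo permutation p-value for a difference in means."""
    rng = random.Random(seed)
    observed = mean(treatment) - mean(control)
    pooled = list(treatment) + list(control)
    n_t = len(treatment)
    extreme = 0
    for _ in range(n_perm):
        # Under the null, group labels are exchangeable, so reshuffle them.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_t]) - mean(pooled[n_t:])
        # Small tolerance so ties with the observed value count as extreme.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    # Add-one correction keeps the Monte Carlo p-value strictly positive.
    return (extreme + 1) / (n_perm + 1)


def perm_confidence_interval(treatment, control, shifts, alpha=0.05, **kwargs):
    """Invert the test: keep every candidate shift that is NOT rejected.

    Assumes (hypothetically) that treatment adds a constant shift to each unit.
    Returns the (min, max) of the retained shifts, or None if all are rejected.
    """
    kept = [
        d for d in shifts
        if perm_test_pvalue([x - d for x in treatment], control, **kwargs) > alpha
    ]
    return (min(kept), max(kept)) if kept else None
```

Even this toy version hints at the “massive datasets” problem: every candidate shift needs its own batch of reshuffles over the full data, so the cost grows with the dataset size times the number of permutations times the grid size.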

Second, knowing that I had experience with survey data, Ryan passed on to me an email request to review some new methodology. Varun Kshirsagar, working on the Poverty Probability Index (PPI), had made some revisions to this poverty-tracking method and wanted to get feedback from someone who understood both modern statistics and machine learning tools and the kind of complex-survey-design datasets used to estimate the PPI models. It was a real pleasure working with Varun, and thinking about how to combine these two worlds (ML and survey data) had been a huge part of my motivation to go back to grad school in the first place and attend CMU in particular. We wrote up a short paper on the method for the NIPS 2017 workshop on ML for the Developing World, and we won the best paper award 🙂 I plan to revisit some of these ideas in future research: How do we run logistic-regression lasso or elastic net with survey-weighted data? How should we cross-validate when the survey design is not iid?
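To make the cross-validation question concrete, here is a rough sketch of one plausible starting point, not the method from our paper: build the folds from whole sampled clusters (primary sampling units, or PSUs) rather than from individual respondents, and weight the held-out losses by the survey weights. The function names and the `psu_ids` input are hypothetical, and a stratified design would presumably also want folds formed within strata, which this sketch ignores.

```python
import random


def cluster_folds(psu_ids, n_folds=5, seed=0):
    """Give each observation a fold label so that every PSU stays in one fold."""
    rng = random.Random(seed)
    unique_psus = sorted(set(psu_ids))
    rng.shuffle(unique_psus)
    # Deal the shuffled PSUs out to folds round-robin.
    fold_of_psu = {psu: i % n_folds for i, psu in enumerate(unique_psus)}
    return [fold_of_psu[psu] for psu in psu_ids]


def weighted_mean_loss(losses, weights):
    """Survey-weighted average of per-observation held-out losses."""
    return sum(w * l for w, l in zip(weights, losses)) / sum(weights)
```

The motivation is that respondents from the same PSU tend to resemble each other, so splitting a PSU across training and test folds can make the held-out error look better than it would on a freshly sampled cluster.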

Teaching

Alex and I also continued running the Teach Stats mini. This semester we decided it was a bit embarrassing that Statistics, of all fields, doesn’t have a better standardized assessment of what students are learning in our intro courses. Without such a tool, it’s hard to do good pedagogy research and give strong evidence about whether your interventions / new methods have any impact.

There are already a couple of assessment instruments out there, but most were written by psychologists or engineers rather than by statisticians. Also, many of their questions are procedural, rather than about conceptual understanding. Even though these assessments have passed all the standard psychometric tests, there’s no guarantee that whatever they measure is actually the thing we’d *like* to measure.

So we started discussing what we’d like to measure instead, drafting questions, and planning out how we would validate these questions. Inspired by my own experiences in user-experience research at Olin and Ziba, and with the help of an article on writing good assessments for physics education by Adams and Wieman, we started planning think-aloud studies. The idea is to watch students work through our problems, talking out loud as they do so, so that we can see where they trip up. Do they get it wrong just because the question is poorly worded, even if they understand the concept? Do they get it right just because you can eliminate some choices immediately, even if they don’t understand the concept at all?

We ran a pilot study this fall, and at the end of the semester, I had my first chance to help draft an IRB application—hurrah for statisticians actually working on research design!

This summer and fall, I also kept up with an informal History of Stats reading group organized by fellow PhD student Lee Richardson. Again, these journal clubs and reading groups have been my favorite part of the PhD curriculum, and I wish more of our “real” classes had been run this way.

Life

?!? “Life”? Not much that I can recall this semester.

Next up

The 10th and final semester of my Statistics PhD program.