Here’s another post on life as a statistics PhD student (in the Department of Statistics, at Carnegie Mellon University, in Pittsburgh, PA).

The previous such post was After 1st semester of Statistics PhD program.

Classes:

- I feared that Advanced Probability Overview would be just dry esoteric theory, but Jing Lei ensured all the topics were really well-motivated. Although it was tough, I did better than I’d hoped (especially given that I’ve never taken a proper Real Analysis course). In Statistical Machine Learning, Larry Wasserman and Ryan Tibshirani did a great job of balancing “old” core theory with new cutting-edge research topics, including helpful homework assignments that gave us practice both in theory and in applications.

- My highlight of the semester was being able to read and digest a research paper that was way too abstract when I tried reading it a few years ago. It really hit me that I must be learning something in grad school 🙂

(The paper was Building Consistent Regression Trees from Complex Sample Data, by Toth and Eltinge. While working at Census, I wanted to try running a complex-survey-weighted regression tree, but I couldn’t get much out of this paper. Now, after a good dose of probability theory and machine learning, it’s far clearer. In fact, I have some ideas about extending this work!)

- The Statistical Machine Learning class referenced a ton of crazy math terms I wasn’t familiar with: Banach and Hilbert spaces, Lp norms, conjugate functions, etc. It terrified me at first (I’ve never even heard of this stuff, should I have taken grad-level functional analysis before I started this PhD, am I about to fail?!?), but it turns out a lot of it is just names for specific versions of general concepts that I already knew. Whew. Also, most of it got used repeatedly from topic to topic, so we did gain familiarity even without explicitly taking a functional analysis course etc. So, don’t get disheartened too easily by unfamiliar terminology!

- It was great to finally learn more about Lp norms and about splines. Also, almost everything in SML can be written as a penalized regression 😛

- Smoothing splines and Reproducing Kernel Hilbert Space (RKHS) regression are nifty because the setup is that you want to optimize over all possible functions. So you start out with an infinite-dimensional space, for which in general there might be no simple way to search/optimize! … But in these specific setups, we can prove that the optimal solution happens to lie in a finite-dimensional subspace, where your usual optimization/search tools will work after all. Nice.
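To make the finite-dimensional claim concrete, here is the standard kernel ridge regression case. The notation is assumed for illustration and is not taken from the course notes: $K$ is a kernel, $\mathcal{H}$ is its RKHS, and $\lambda > 0$ is a penalty weight. The problem is posed over all of $\mathcal{H}$:

$$
\hat{f} = \arg\min_{f \in \mathcal{H}} \; \sum_{i=1}^{n} \bigl(y_i - f(x_i)\bigr)^2 + \lambda \, \|f\|_{\mathcal{H}}^2
$$

The representer theorem says a minimizer can be written using only the $n$ kernel functions centered at the data points:

$$
\hat{f}(x) = \sum_{i=1}^{n} \hat{\alpha}_i \, K(x, x_i),
\qquad
\hat{\alpha} = (\mathbf{K} + \lambda I_n)^{-1} y,
\qquad
\mathbf{K}_{ij} = K(x_i, x_j)
$$

So the search over an infinite-dimensional function space reduces to solving an $n \times n$ linear system.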

- Larry had a nice “foundations” day in SML, with examples where Bayes and Frequentist analysis differ greatly. However, I didn’t find most of his examples too convincing, since the Bayesian “loses” only due to a stupid choice of priors; or the Bayesian “loses” for finite n but in a case where n in practice would have to be ridiculously large. Still, this helped stretch my thinking about how these inference philosophies differ.

- Larry points out: you often hear that “We might as well go Bayes because if you give people a Frequentist interval, they’ll interpret it as a Bayes interval.” But the reverse is also true: give someone a sequence of 95% Bayes intervals, and they’ll expect 95% of them to contain the true value. That is NOT guaranteed for Bayes credible intervals (unlike Frequentist confidence intervals).
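A small simulation can illustrate the coverage point. This is a sketch under assumed settings, not an example from the lecture: data are $N(\theta, 1)$ with $n = 5$, the prior is $\theta \sim N(0, 1)$, and the true value is fixed at $\theta = 3$, which the prior considers unlikely. All variable names are made up for illustration.

```r
# Toy setup (an assumption, not from the course):
# X_1, ..., X_n ~ N(theta, 1), with prior theta ~ N(0, 1).
set.seed(1)
n <- 5
theta_true <- 3      # fixed "true" value, far from the prior mean of 0
n_sims <- 10000
z <- qnorm(0.975)

# Sample mean from each simulated dataset
xbar <- replicate(n_sims, mean(rnorm(n, mean = theta_true, sd = 1)))

# Frequentist 95% confidence interval: xbar +/- z / sqrt(n)
freq_covers <- abs(xbar - theta_true) <= z / sqrt(n)

# Bayes 95% credible interval from the conjugate posterior
# N(n * xbar / (n + 1), 1 / (n + 1))
post_mean <- n * xbar / (n + 1)
post_sd <- sqrt(1 / (n + 1))
bayes_covers <- abs(post_mean - theta_true) <= z * post_sd

# Proportion of intervals that contain the true theta
freq_coverage <- mean(freq_covers)
bayes_coverage <- mean(bayes_covers)
print(c(frequentist = freq_coverage, bayes = bayes_coverage))
```

In this setup the confidence intervals should cover $\theta$ in about 95% of the repetitions, while the credible intervals cover it noticeably less often, because the prior shrinks every interval toward 0. Averaged over values of $\theta$ drawn from the prior itself, the credible intervals would cover 95% of the time; the shortfall appears when the true value is held fixed.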

- In addition to Subjective, Objective, Empirical, or Calibrated Bayes, let me propose “Cynical Bayes”: Don’t choose a prior because you believe it. Instead, choose one to optimize your estimator’s Frequentist properties. That way you can keep your expert Freq’ist colleagues happy, yet still call it a Bayes estimator, so you can give the usual Bayes interpretation to keep nonexperts happy 🙂

- A background in Statistics will keep you thinking about distributions and probabilities and convergences. But a background in Applied Math may be better at giving you tools and ideas for feature engineering. It’s worth having both toolsets.

- The Advanced Probability Overview course covered some measure-theoretic probability. I’m finally understanding the subtleties of how the different modes of convergence all differ, and why it matters. We saw these concepts last semester in Intermediate Statistics, but the distinctions are far clearer to me now.

- AdvProb’s measure theory section also really helped me understand why textbooks say a random variable is a “function”: intuitively it seems like just a variable or a number or something… but in fact it really is a function, from “the state of the world” i.e. an element $\omega$ of the set $\Omega$ of all possible outcomes or states of the world, to the measurement you will collect (often a number on the real line). Finally, this measure theory view of probability, as the size of a subset of $\Omega$, is helpful. Even though statisticians’ goal is to develop tools that let them work with the range of the random variable and ignore the domain $\Omega$, it’s good to remember that this domain exists.

- However, measure theory and probability theory suffer from some really poor terminology! For example, it took me far too long to realize that “integrable” means “the integral is finite”, NOT “the integral exists.”
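One way to pin down the distinction (the notation here is assumed for illustration): a random variable $X$ on a probability space $(\Omega, \mathcal{F}, P)$ is called integrable when

$$
E|X| = \int_{\Omega} |X| \, dP < \infty .
$$

For example, take $U \sim \text{Uniform}(0, 1)$ and $X = 1/U$. Then

$$
E[X] = \int_0^1 \frac{1}{u} \, du = +\infty .
$$

The integral exists, in the sense that it has a well-defined value of $+\infty$, but $X$ is not integrable.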

- When we teach students R, we really should use practical examples, not the arbitrary generic examples that you see so often. Instead of just showing me `list(1,"a")`, it helps to give a realistic example of why you may actually need to collect together numeric and character elements in a single object.
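As one possible illustration of a more realistic list, here is a minimal sketch. The function `summarize_poll` and its field names are hypothetical, invented for this example. A summary of a survey question naturally mixes text (the question wording), counts, proportions, and a vector of answer categories, and a list keeps each piece in its own type.

```r
# Hypothetical helper: summarize answers to one survey question.
# The result mixes character, integer, and numeric elements,
# which an atomic vector cannot hold without coercion.
summarize_poll <- function(responses, question) {
  list(
    question = question,                   # character: the question wording
    n = length(responses),                 # integer: number of respondents
    prop_yes = mean(responses == "yes"),   # numeric: share answering "yes"
    levels = sort(unique(responses))       # character vector: distinct answers
  )
}

poll <- summarize_poll(c("yes", "no", "yes", "yes"), "Do you use R weekly?")
str(poll)

# By contrast, c() silently coerces everything to character:
coerced <- c(1, "a")
print(class(coerced))
```

Each element can then be pulled out by name, such as `poll$prop_yes`, and used in its original type.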

Research:

- I started a new research project, the Advanced Data Analysis project, which will run until the end of this upcoming Fall semester (so about a year total). I am working with Rob Kass and Avniel Ghuman on using magnetoencephalography (MEG) data to study epilepsy.

- At Rob’s research group meetings, I learn a ton from the helpful questions he asks. When presenting someone else’s work (e.g. for a journal club), ask yourself, “What would you do if *your* research were based on the data from this paper?” Still, I’ve found I really do need to keep scheduling weekly 1-on-1 meetings; the group meetings are not enough to stay optimally on track.

- Neuroscience is hard! Pre-processing massive neuroscience datasets using not-fully-documented open source software is particularly hard. When I chose this project, I did not realize how much time I would have to spend on learning the subject matter, relevant specialized software tools, and data pre-processing workflow. Four months in and I’ve still barely gotten to the point of doing any “real” statistics. It’s a good project and I’m learning a lot, but it’s disheartening to see how much of that learning has been tied to debugging open-source software installations that I’ll only ever use again if I stay in this sub-field.

I would advise the next PhD cohort to choose projects that’ll primarily teach you more general-purpose, transferable skills. Maybe take an existing theoretical method that’s not implemented in software yet, and make it into an R package?

Life:

- This was a tougher semester in many ways, with harder classes and more research-related setbacks. The Cake song Tougher than it is got a lot of play time on my headphones 😛

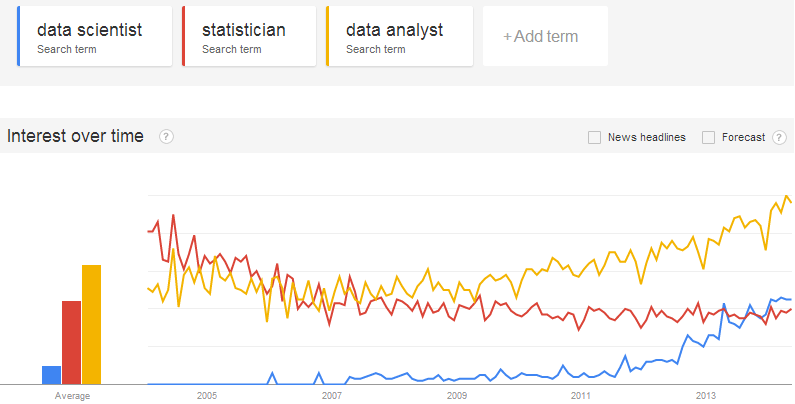

- I’m glad that despite my slow posting rate, the blog still kept getting regular traffic—particularly Is a Master’s degree in Statistics worthwhile? I guess it’s a burning question these days.

- A big help to my sanity this semester came from joining the All University Orchestra. After a long week of tough classes and research setbacks, it’s great to switch brain modes and play my clarinet. I’ve really missed playing for the past few years in DC, and I’m glad to get back into it.

- Pittsburgh highlights: Bayernhof museum, Pittsburgh Symphony Orchestra concerts (The Legend of Zelda, “Behind the Notes” talks), Jozsa Corner, Point Brugge Cafe, sampling all the Squirrel Hill pizzerias, MCMC Bar Crawl on the Southside Flats, riding the ridiculously steep inclines, Pittsburgh Area Theater Organ Society concerts and tours of their beautiful theater organ

- Things still on our list to do in Pittsburgh: see a CMU theater performance, Pittsburgh aviary and zoo, Kennywood amusement park, Steelers game, Penguins game

- I look forward to getting a chance to teach a whole course this summer. It’ll be 36-309, Experimental Design. I also took some Eberly Center seminars, and the department organized helpful planning meetings for those of us students who’ll teach in the summer, so I feel reasonably prepared.

I plan to have my students design a series of experiments to bake the ultimate chocolate chip cookie. It will be delicious. I baked Meg Hourihan’s mean chocolate chip cookies for a department event earlier this spring, which seems like an appropriate start.

However, ironically, as the local knitr / reproducible research fanboy… I’m supposed to teach the course using SPSS, which seems to be largely point-and-click, without much support for reproducible reports 🙁

- It was a nice difference to be on the other side of the department’s open house for admitted students this year 🙂 I’m also happy to be reading Grad Cafe forums from a much more relaxed point of view this year!

- I’m surprised there’s not much crossover between the CMU and UPitt statistics departments. And the stats community outside each department doesn’t seem as vibrant as it was in DC. I attended the American Statistical Association’s Pittsburgh chapter banquet. Besides CMU and Pitt folks, most attendees seemed to be RAND employees or independent consultants. There are also some Meetup groups: the Pittsburgh Data Visualization Group and the Pittsburgh useR Group.

- I’ve updated and expanded my CMU blogroll in the sidebar. Please let me know if I missed your CMU/Pittsburgh statistics-related blog!

Other people’s helpful posts on the PhD experience:

- Nathan Yau (of FlowingData) looks back on his own PhD experience

- The Guardian’s “Five things successful PhD students refuse to do”

- Matt Might’s 3 qualities of successful Ph.D. students and Illustrated guide to a PhD

- Philip Guo’s Advice for new Ph.D. students and How To Be Effective. “Do less” and “Ask for help” are critical!

Next up: the 3rd, 4th, 5th, 6th, 7th, 8th, 9th, and 10th semesters of my Statistics PhD program.