A while back I recommended Nathan Uyttendaele’s beginner’s guide to speeding up R code.

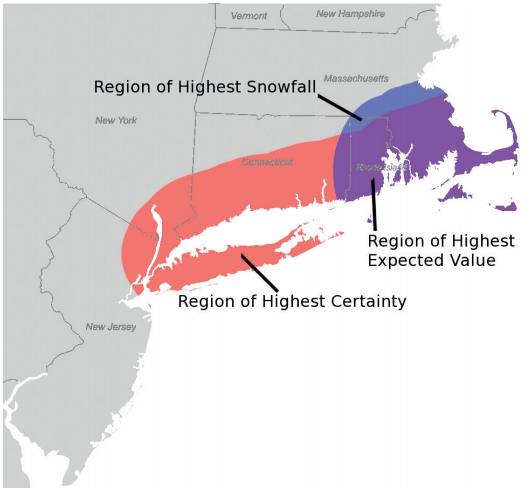

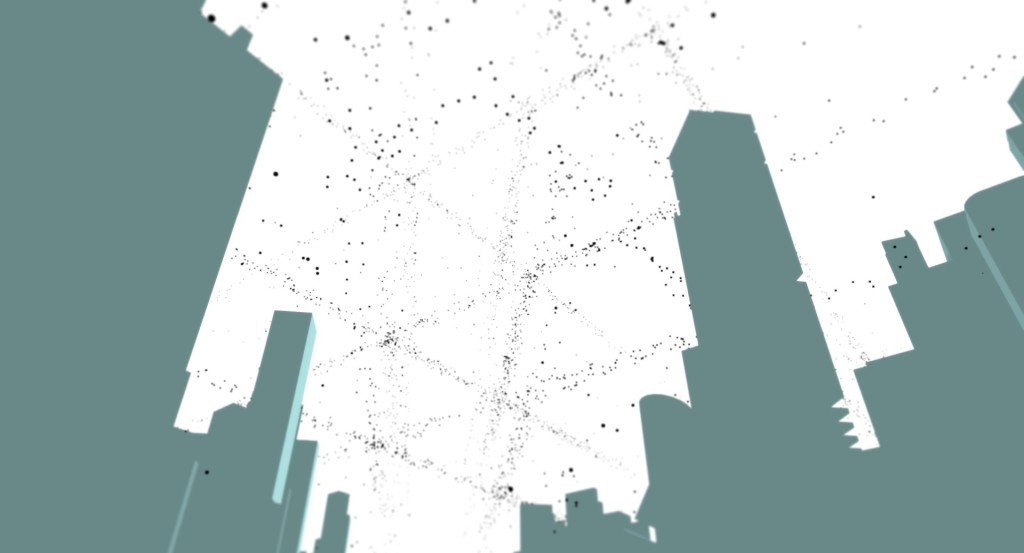

I’ve just heard about Nathan’s computer game project, DotCity. It sounds like a statistician’s minimalist take on SimCity, with a special focus on demographic shifts in your population of dots (baby booms, aging, etc.). What’s more, he plans to program the internals in R.

Consider backing the game on Kickstarter (through July 8th). I’m supporting it not just to play the game itself, but to see what Nathan learns from the development process. How do you even begin to write a game in R? Will gamers need to have R installed locally to play it, or will it be running online on something like an RStudio server?

Meanwhile, do you know of any other statistics-themed computer games?

- I missed the boat on backing Timmy’s Journey, but happily it seems that development is going ahead.

- SpaceChem is a puzzle game about factory line optimization (and not, actually, about chemistry). Perhaps someone can imagine how to take it a step further and gamify statistical process control à la Shewhart and Deming.

- It’s not exactly stats, but working with data in text files is an important related skill. The Command Line Murders is a detective noir game for teaching this skill to journalists.

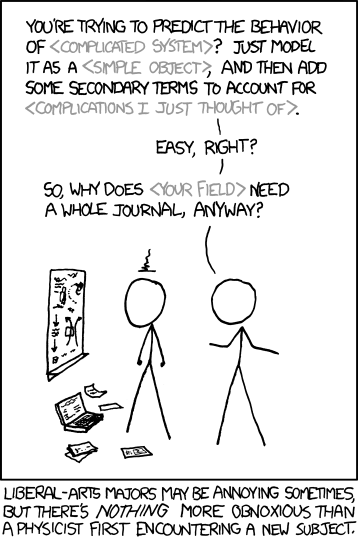

- The command line approach reminds me of Zork and other old text adventure / interactive fiction games. Perhaps, using a similar approach to the step-by-step interaction of swirl (“Learn R, in R”), someone could make an I.F. game about data analysis. Instead of OPEN DOOR, ASK TROLL ABOUT SWORD, TAKE AMULET, you would type commands like READ TABLE, ASK SCIENTIST ABOUT DATA DICTIONARY, PLOT RESIDUALS… all in the service of some broader story/puzzle context, not just an analysis by itself.

- Kim Asendorf wrote a fictional “short story” told through a series of data visualizations. (See also FlowingData’s overview.) The same medium could be used for a puzzle/mystery/adventure game.